KubeCon NA recap: insights on observability cost, telemetry quality, and pipeline innovation

KubeCon North America was a clear reminder of just how hard managing telemetry has become. Our booth leaned into the theme of “telemetry whack-a-mole,” the constant game DevOps teams and engineers are forced to play as issues pop up faster than they can be fixed. In our white suits, we introduced the “Telemetry Mole Patrol,” a nod to our mission to end this cycle of waste and give teams clearer, more reliable visibility.

During the conference, we connected with hundreds of engineers facing the same battles: skyrocketing observability costs, noisy logs, brittle pipelines, and endless firefighting to maintain data flow. It's clear that the telemetry problem is intensifying, and teams everywhere are seeking smarter solutions for observability cost reduction and OpenTelemetry pipeline management.

In conversations throughout the event, platform teams, SREs, and observability leads highlighted three critical pain points:

- Costs are exploding. Teams are overwhelmed by unnecessary logs, metrics, and traces, spending millions on data that delivers minimal value. If you're wondering how to reduce observability costs, or Datadog costs specifically, you're not alone. Many teams are looking to reduce telemetry volume and cut their log ingestion bills.

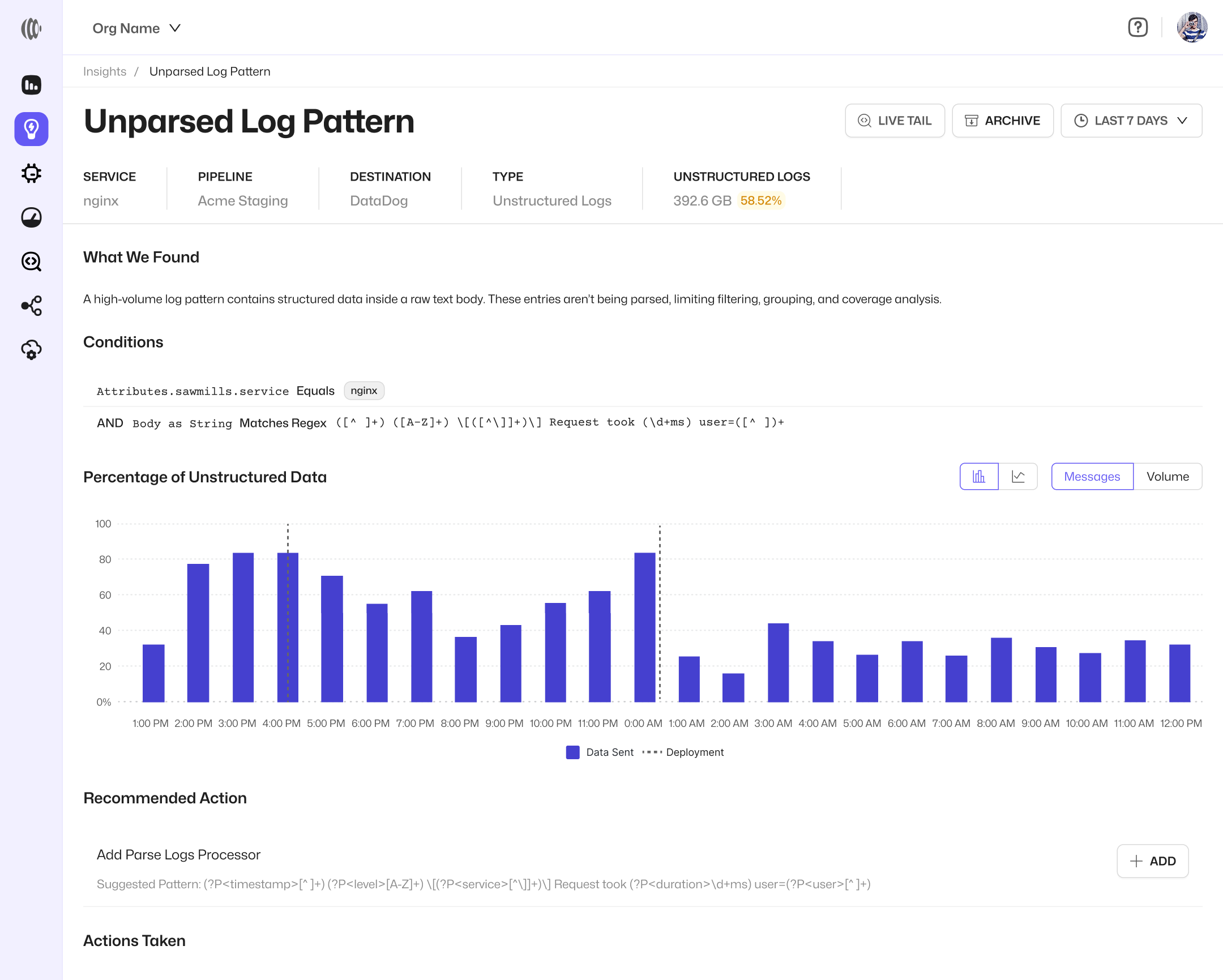

- Data quality is inconsistent. Unstructured logs, missing attributes, and poor context hinder effective troubleshooting and automation. Filtering out noisy logs before they are sent is an essential step toward better observability cost governance, but it's only the beginning. AI remains underused as a tool for fixing the problem.

- Pipelines are fragile. Even minor configuration changes to the OpenTelemetry Collector often require restarts or rollouts, risking data loss and dashboard disruptions. This fragility underscores the need for more advanced telemetry pipeline tools.
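To make “filtering before sending” concrete, here is a minimal Python sketch of the logic. It assumes a simple in-memory batch of log records. The `LogRecord` type, the severity table, the noise patterns, and the `keep`/`filter_batch` helpers are all hypothetical illustrations, not an OpenTelemetry or Sawmills API. In a real Collector deployment, this kind of rule would typically be configured in a filter processor in the pipeline rather than written as application code.

```python
import re
from dataclasses import dataclass

# Severity ordering loosely modeled on common log levels (an assumption for this sketch).
SEVERITY = {"DEBUG": 10, "INFO": 20, "WARN": 30, "ERROR": 40}

# Hypothetical noise patterns; real rules would come from analyzing your own logs.
NOISY_PATTERNS = [re.compile(r"GET /healthz"), re.compile(r"heartbeat ok")]


@dataclass
class LogRecord:
    severity: str
    body: str


def keep(record: LogRecord, min_severity: str = "INFO") -> bool:
    """Return True if the record should be forwarded to the backend."""
    # Unknown severities map to 0, so they are treated as the lowest level and dropped.
    if SEVERITY.get(record.severity, 0) < SEVERITY[min_severity]:
        return False
    # Drop records whose body matches any known-noisy pattern.
    return not any(p.search(record.body) for p in NOISY_PATTERNS)


def filter_batch(batch: list, min_severity: str = "INFO") -> list:
    """Drop noisy records from a batch before it is exported."""
    return [r for r in batch if keep(r, min_severity)]


if __name__ == "__main__":
    batch = [
        LogRecord("DEBUG", "cache miss for key user:42"),
        LogRecord("INFO", "GET /healthz 200"),
        LogRecord("INFO", "order 1001 created"),
        LogRecord("ERROR", "payment gateway timeout"),
    ]
    kept = filter_batch(batch)
    print(f"forwarding {len(kept)} of {len(batch)} records")  # forwarding 2 of 4 records
```

Records dropped at this stage never reach the backend, so they never appear on the ingestion bill. The trade-off is that filtered data is gone for good. For that reason, rules are usually scoped narrowly (health checks, heartbeats, debug chatter) rather than applied broadly.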

Spotlight on Amir Jakoby's Session: Zero-Downtime Telemetry

We were delighted to be selected to speak at KubeCon. Sawmills CTO Amir Jakoby spoke alongside JFrog's Shiran Melamed in a talk titled “Zero-Downtime Telemetry: Hot Reloading OpenTelemetry Collector Pipelines.”

In cloud-native environments, adaptability is key. Yet reconfiguring the Collector typically means restarts, with the attendant risk of data gaps and reliability issues. Amir and Shiran unveiled a game-changing hot reload feature for the OpenTelemetry Collector that enables dynamic reconfiguration of processors, filters, samplers, and transformers without downtime.
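A common pattern illustrates the core idea behind hot reloading. You build and validate the new processor chain off to the side, then swap a single reference. In-flight batches finish on the old chain, and new batches pick up the new one. The Python sketch below assumes processors are plain functions over a list of records. `HotReloadablePipeline` and its methods are hypothetical names for illustration. They do not describe how the Collector feature presented in the talk is implemented.

```python
import threading
from typing import Callable, List

# A processor takes a batch of records and returns a (possibly modified) batch.
Processor = Callable[[list], list]


class HotReloadablePipeline:
    """Hypothetical pipeline whose processor chain can be swapped while it keeps running."""

    def __init__(self, processors: List[Processor]):
        self._lock = threading.Lock()
        self._chain = tuple(processors)
        self.generation = 0  # incremented on every successful reload

    def reload(self, processors: List[Processor]) -> None:
        # Build and validate the new chain first, so a bad update never replaces a good one.
        new_chain = tuple(processors)
        if not all(callable(p) for p in new_chain):
            raise ValueError("every processor must be callable")
        # Swap the reference; batches already running keep using the old tuple.
        with self._lock:
            self._chain = new_chain
            self.generation += 1

    def process(self, batch: list) -> list:
        # Snapshot the current chain; a concurrent reload only affects later batches.
        with self._lock:
            chain = self._chain
        for proc in chain:
            batch = proc(batch)
        return batch


if __name__ == "__main__":
    drop_debug = lambda b: [r for r in b if r["level"] != "DEBUG"]
    add_env = lambda b: [{**r, "env": "prod"} for r in b]

    pipeline = HotReloadablePipeline([drop_debug])
    batch = [{"level": "DEBUG"}, {"level": "INFO"}]
    print(pipeline.process(batch))  # [{'level': 'INFO'}]

    pipeline.reload([drop_debug, add_env])  # no restart, no dropped batches
    print(pipeline.process(batch))  # [{'level': 'INFO', 'env': 'prod'}]
```

The swap happens only after validation succeeds. A malformed update therefore leaves the running chain untouched instead of taking the pipeline down.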

Attendees gained actionable insights into:

- Hot-swappable processors that activate instantly on pipeline updates

- Seamless, zero-downtime adjustments

- Uninterrupted data streams during changes

- More robust, self-adapting telemetry architectures

For anyone frustrated by deployment delays or pipeline tweaks, the session offered a look at the future of OpenTelemetry pipeline management. It's a natural complement to AI-powered telemetry solutions like Sawmills, which go further by automating waste detection, schema fixes, and remediation to tame high-cardinality metrics and reduce custom metrics costs.
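High-cardinality metrics get expensive because every unique combination of label values becomes its own time series. Backends such as Datadog generally bill each unique combination of metric name and tag values as a separate custom metric. The hypothetical Python helpers below work through made-up request samples. They count the series a set of labels actually produces and give the worst-case upper bound, which is the product of the number of distinct values per label.

```python
from math import prod


def series_count(samples: list, labels: list) -> int:
    """Distinct time series actually produced: unique combinations of the chosen label values."""
    return len({tuple(s[label] for label in labels) for s in samples})


def worst_case_series(samples: list, labels: list) -> int:
    """Upper bound on series: product of the number of distinct values of each label."""
    return prod(len({s[label] for s in samples}) for label in labels)


if __name__ == "__main__":
    # Hypothetical request samples: 2 methods x 2 status codes x 50 user IDs.
    samples = [
        {"method": m, "status": st, "user_id": str(u)}
        for m in ("GET", "POST")
        for st in ("200", "500")
        for u in range(50)
    ]
    with_user = series_count(samples, ["method", "status", "user_id"])
    without_user = series_count(samples, ["method", "status"])
    print(with_user, without_user)  # 200 4
```

Dropping the per-user `user_id` label, or aggregating it away before export, cuts this metric from 200 series to 4. It still keeps the method and status breakdown that most dashboards need.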

Check out the talk:

Embracing the Shift to Smarter Telemetry Management Powered by AI

KubeCon reinforced that the industry is evolving past whack-a-mole tactics toward intelligent pipeline control. With Sawmills, teams can:

- Automatically identify waste, noise, and schema problems

- Remediate issues in-stream without restarts

- Enhance reliability and signal quality

- Dramatically lower observability costs

- Provide the missing control layer for DevOps

You don't have to replace Datadog, New Relic, Grafana, or Loki. Instead, you can apply intelligent telemetry management on top of them. We're not another observability platform; we're the upstream solution for telemetry sovereignty and cost control. Explore how Sawmills can transform your telemetry pipeline today.
